CUSTOMER USE CASES

BIG DATA REASONING

Modern enterprises are at a crossroads with the advent of generative AI. LitenAI's mission is to help enterprises tap into the vast data and knowledge they hold. To that end, it has developed a big data copilot that integrates generative AI agents with big data clusters to deliver much faster response times.

TURBOCHARGE PRODUCTIVITY

At one of our client sites, training new engineers required the involvement of experienced ones. LitenAI streamlined this process by using existing playbooks and cloud server datasets to refine its models. It also applied customer-specific prompt engineering so that the Liten platform would give expert, human-like responses.

A newly onboarded engineer could ask how to debug status code errors, what various log files contain, or what the most significant issues of the past month were, and receive expert, human-like responses.

NATURAL LANGUAGE BASED REASONING

Customers are often hesitant to learn a new query language. The Liten platform addresses this concern by offering a natural language interface for specifying queries. Its code AI agent translates these specifications into SQL queries, so customers do not need to write SQL themselves. The platform also integrates sophisticated visualization tools that let users create and view cloud-based dashboards. All of these functions are orchestrated by a master agent and accessed through a single natural language interface.
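
The orchestration pattern described above, in which a master agent hands each request to a specialized agent, can be sketched as follows. This is a hypothetical illustration, not Liten's implementation: the agent functions, the keyword routing, and the `logs` table are assumptions, and fixed templates stand in for the language models that would actually generate SQL and chart specifications.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class AgentResult:
    agent: str
    output: str


def code_agent(request: str) -> AgentResult:
    """Hypothetical stand-in for the code AI agent that turns a request into SQL."""
    # A real agent would generate SQL with a model; here a fixed template is chosen.
    if "errors" in request.lower():
        sql = "SELECT status, COUNT(*) AS n FROM logs WHERE status >= 500 GROUP BY status"
    else:
        sql = "SELECT * FROM logs LIMIT 10"
    return AgentResult("code", sql)


def visualization_agent(request: str) -> AgentResult:
    """Hypothetical stand-in for the visualization tools that build dashboards."""
    return AgentResult("visualization", f"chart spec for: {request}")


def master_agent(request: str) -> AgentResult:
    """Route a natural-language request to the agent that should handle it."""
    routes: List[Tuple[Tuple[str, ...], Callable[[str], AgentResult]]] = [
        (("plot", "chart", "dashboard", "visualize"), visualization_agent),
    ]
    lowered = request.lower()
    for keywords, agent in routes:
        if any(k in lowered for k in keywords):
            return agent(request)
    # Default: treat the request as a data question for the code agent.
    return code_agent(request)


print(master_agent("Count server errors by status code").output)
print(master_agent("Plot daily request volume on a dashboard").agent)
```

Keeping the routing in one place means new agents can be added without changing the user-facing interface, which is the point of a single natural language entry point.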

Liten's models act as copilots for cloud professionals, including CIOs, SREs, and DevOps engineers, helping them significantly improve their productivity.

STREAMLINED EVENT PUBLISHING

At one of our customers, many alerts were being issued for the same event. Liten can streamline this flow: it stores the alerts, uses its models to detect similar events, and publishes consolidated messages through Slack bots.
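
A minimal sketch of the consolidation step, assuming alerts arrive as plain text strings. Liten uses its models to decide which events are similar; this illustration swaps in a simple string-similarity heuristic (Python's `difflib.SequenceMatcher` applied to alerts with numbers masked), and the function names and the 0.85 threshold are hypothetical. Posting to Slack is left out; the consolidated strings are what a bot would send.

```python
import re
from difflib import SequenceMatcher
from typing import List, Tuple


def normalize(alert: str) -> str:
    """Mask volatile tokens (hex ids, numbers) so repeats of one event match."""
    alert = re.sub(r"0x[0-9a-fA-F]+", "<hex>", alert)
    return re.sub(r"\d+", "<n>", alert.lower())


def group_alerts(alerts: List[str], threshold: float = 0.85) -> List[List[str]]:
    """Greedily cluster alerts whose normalized text is similar enough."""
    groups: List[Tuple[str, List[str]]] = []  # (representative key, members)
    for alert in alerts:
        key = normalize(alert)
        for rep, members in groups:
            if SequenceMatcher(None, rep, key).ratio() >= threshold:
                members.append(alert)
                break
        else:
            groups.append((key, [alert]))
    return [members for _, members in groups]


def consolidated_message(group: List[str]) -> str:
    """Build one message per group of similar alerts."""
    if len(group) == 1:
        return group[0]
    return f"{group[0]} (+{len(group) - 1} similar alerts)"


alerts = [
    "Disk usage 91% on host web-01",
    "Disk usage 93% on host web-01",
    "Disk usage 95% on host web-01",
    "Connection refused to db-02 port 5432",
]
for group in group_alerts(alerts):
    print(consolidated_message(group))
```

A model-based detector would replace `normalize` and the similarity ratio, but the store, group, and publish structure stays the same.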

SAMPLE USER REASONING SESSIONS

LitenAI can understand diverse log file formats. Its adaptable data model can be extended to handle customer-specific data. LitenAI's fine-tuned models capture domain-specific knowledge, and LitenAI can also fine-tune models on a customer's own data, exclusively for that customer's use cases.

SERVER LOG REASONING

LitenAI models are fine-tuned to reason about server logs the way an experienced engineer would. Here are several examples showing the range of analysis it can perform.

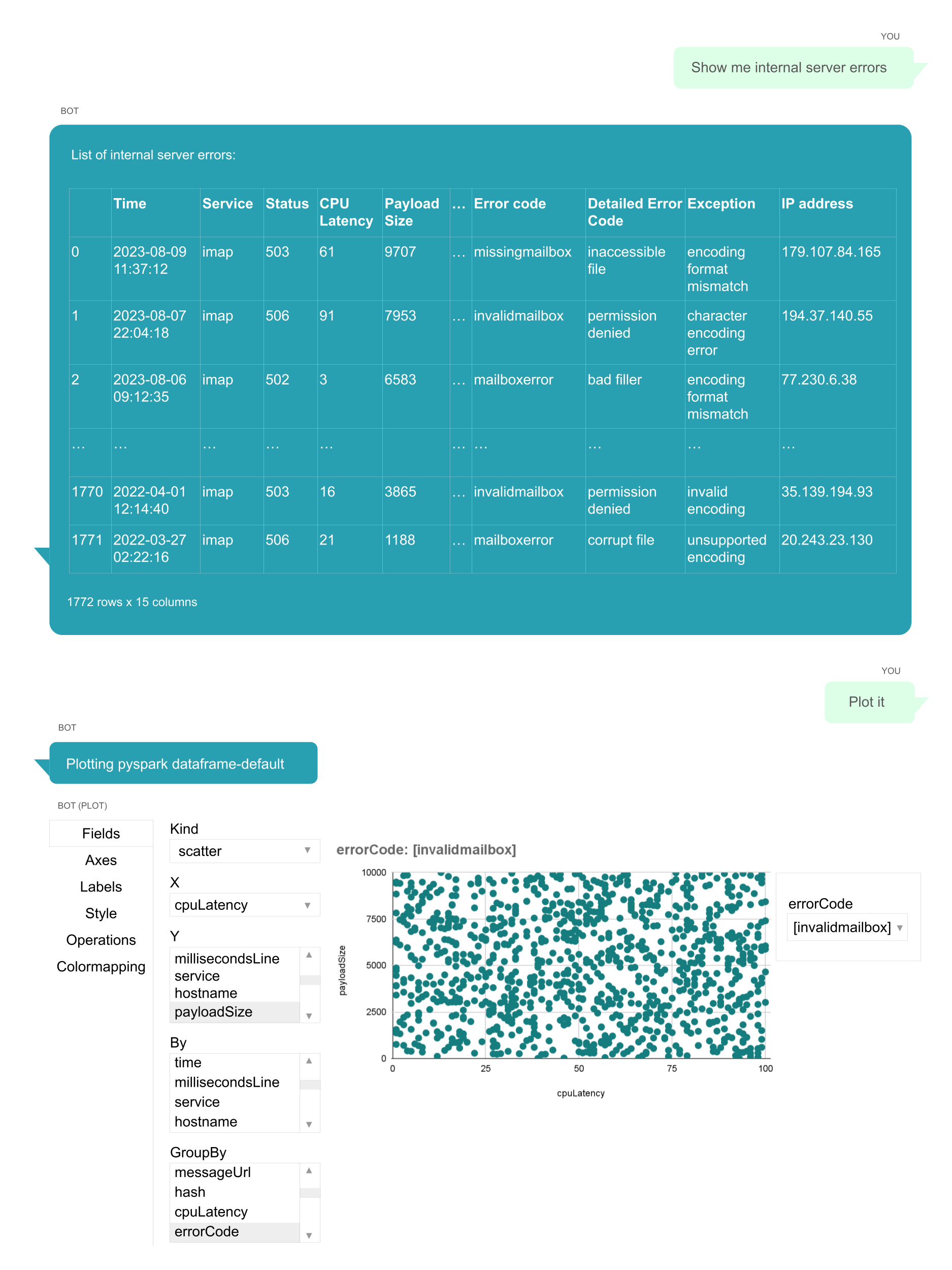

Internal server error analysis

Users can upload their data for analysis through LitenAI's platform. LitenAI manages SQL tables and ingests the data into them, so customers can run queries, create visualizations, and derive insights from the data. LitenAI also performs advanced analysis and coordinates both data and AI agents to carry out tasks for users.
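
As an illustration of the ingest-then-query pattern, the sketch below parses web server access logs into a SQL table and asks which endpoints return the most internal server errors. It assumes logs in the Common Log Format and uses an in-memory SQLite database as a stand-in for the tables LitenAI manages; the `requests` table schema, the helper name, and the sample lines are hypothetical.

```python
import re
import sqlite3
from typing import List

# Common Log Format: host ident user [timestamp] "METHOD path protocol" status size
LOG_LINE = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def ingest(conn: sqlite3.Connection, lines: List[str]) -> int:
    """Parse log lines and insert them into a hypothetical `requests` table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS requests "
        "(host TEXT, ts TEXT, method TEXT, path TEXT, status INTEGER)"
    )
    rows = []
    for line in lines:
        m = LOG_LINE.match(line)
        if m:  # skip lines that do not match the expected format
            rows.append((m["host"], m["ts"], m["method"], m["path"], int(m["status"])))
    conn.executemany("INSERT INTO requests VALUES (?, ?, ?, ?, ?)", rows)
    return len(rows)


sample = [
    '10.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /api/orders HTTP/1.1" 500 512',
    '10.0.0.2 - - [10/Oct/2023:13:55:40 +0000] "GET /api/orders HTTP/1.1" 500 512',
    '10.0.0.3 - - [10/Oct/2023:13:56:02 +0000] "POST /api/login HTTP/1.1" 200 128',
    '10.0.0.4 - - [10/Oct/2023:13:57:11 +0000] "GET /api/cart HTTP/1.1" 503 64',
]

conn = sqlite3.connect(":memory:")
ingest(conn, sample)

# Which endpoints return the most server errors (5xx status codes)?
errors = conn.execute(
    "SELECT path, status, COUNT(*) AS n FROM requests "
    "WHERE status >= 500 GROUP BY path, status ORDER BY n DESC"
).fetchall()
print(errors)
```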

LitenAI can also analyze this data and provide error analysis.

Customers performed various tasks through the chat interface, such as:

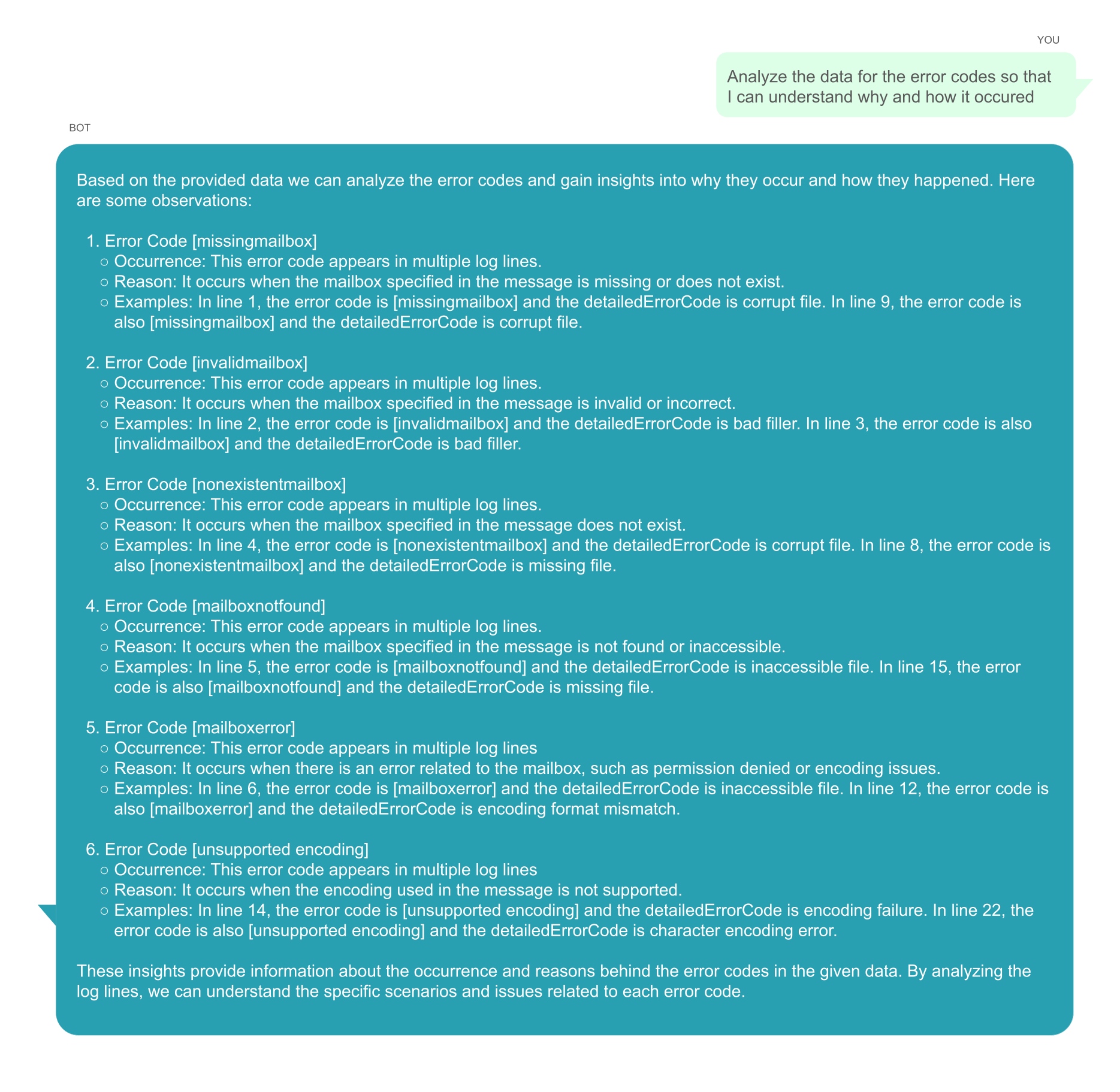

LINUX LOG EXPERT ANALYSIS

The Linux operating system generates diverse log files, including system logs, application logs, and event logs. LitenAI understands these log files and can analyze failures, suggesting potential solutions along with code.
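
A rough sketch of a first step in this kind of analysis: parsing traditional syslog lines and counting failure-like messages per process. It assumes BSD-style lines of the form `Mon dd hh:mm:ss host process[pid]: message`; the keyword list is a hypothetical heuristic standing in for LitenAI's models, and the sample log lines are made up.

```python
import re
from collections import Counter
from typing import List, Optional

# Timestamp, host, process name with optional [pid], then the free-text message.
SYSLOG = re.compile(
    r"(?P<ts>\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) (?P<host>\S+) "
    r"(?P<proc>[\w\-./]+)(?:\[(?P<pid>\d+)\])?: (?P<msg>.*)"
)
# Hypothetical keywords used to flag messages that look like failures.
FAILURE_WORDS = ("error", "fail", "denied", "timeout", "segfault")


def parse(line: str) -> Optional[dict]:
    """Return the fields of a syslog line, or None if it does not match."""
    m = SYSLOG.match(line)
    return m.groupdict() if m else None


def failures_by_process(lines: List[str]) -> Counter:
    """Count entries per process whose message looks like a failure."""
    counts: Counter = Counter()
    for line in lines:
        entry = parse(line)
        if entry and any(w in entry["msg"].lower() for w in FAILURE_WORDS):
            counts[entry["proc"]] += 1
    return counts


logs = [
    "Mar  3 10:15:01 srv1 CRON[2231]: (root) CMD (run-parts /etc/cron.hourly)",
    "Mar  3 10:15:07 srv1 sshd[2240]: Failed password for invalid user admin from 203.0.113.5 port 52311 ssh2",
    "Mar  3 10:15:09 srv1 sshd[2240]: Failed password for invalid user admin from 203.0.113.5 port 52311 ssh2",
    "Mar  3 10:16:42 srv1 kernel: app[3120]: segfault at 0 ip 00007f sp 00007ffd error 4",
]
print(failures_by_process(logs).most_common())
```

Counting by process is only a starting point; the grouped entries are what a deeper analysis, such as suggesting a fix, would work from.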

Syslog error analysis

Interpreting Linux system logs can be challenging. Liten stores these logs and analyzes them in depth to derive valuable insights. Refer to the following chat for an example of this analysis.

Users can ask about different aspects of their Linux log data, such as:

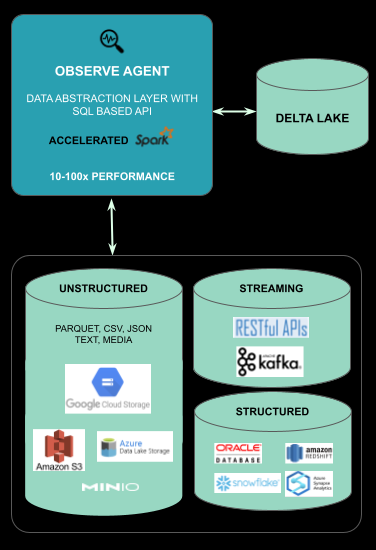

ACCELERATED BIG DATA OBSERVATION

Modern enterprises are experiencing an unprecedented surge in data creation, with vast amounts of information generated constantly. This growth coincides with significant advances in cloud technology, marked by disaggregated systems. Despite continuous hardware advancements, organizations struggle to meet ever-increasing demands for higher performance, shorter cycle times, and lower computational and cloud costs. They are also racing to combat climate change and meet their sustainability targets.

Using a unique tensor representation, LitenAI's data agents can accelerate queries by 50-100x, helping organizations achieve the twin goals of high performance and sustainable computing while also reducing costs in a competitive environment.

ACCELERATING DATA AGENT BY 100x

Current relational and tabular data platforms have not adapted to take advantage of emerging technologies. Using a unique tensor representation, LitenAI's data agents accelerate queries by up to 100x. They transform incoming data into a tensor-formatted columnar structure optimized for processors and hardware accelerators, speeding up existing queries. LitenAI integrates into Spark clusters and can efficiently ingest data from various data warehouses.
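
To make the idea of a tensor-formatted columnar structure concrete, the sketch below converts row records into typed NumPy column arrays, dictionary-encoding string columns as integer codes, and then runs a filter and aggregate over whole columns at once. It is an assumption-laden illustration: NumPy arrays stand in for LitenAI's tensor format, whose actual layout is not described here, and the data and helper names are hypothetical.

```python
import numpy as np

# Hypothetical toy rows, as they might arrive from a row-oriented source.
rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0},
    {"order_id": 2, "region": "US", "amount": 75.5},
    {"order_id": 3, "region": "EU", "amount": 310.0},
    {"order_id": 4, "region": "APAC", "amount": 42.0},
]


def to_columns(rows):
    """Convert row dicts into one contiguous, typed array per field."""
    columns, dictionaries = {}, {}
    for name in rows[0]:
        values = np.array([r[name] for r in rows])
        if values.dtype.kind == "U":
            # Dictionary-encode strings: sorted unique values plus integer codes.
            uniques, codes = np.unique(values, return_inverse=True)
            columns[name] = codes.astype(np.int32)
            dictionaries[name] = uniques
        else:
            columns[name] = values
    return columns, dictionaries


cols, dicts = to_columns(rows)
eu_code = int(np.flatnonzero(dicts["region"] == "EU")[0])

# Filter and aggregate over whole columns at once instead of looping over rows.
mask = (cols["region"] == eu_code) & (cols["amount"] > 100.0)
print(cols["order_id"][mask], cols["amount"][mask].sum())
```

Because every column is a flat numeric array, predicates become elementwise operations that map well onto vectorized CPU instructions or accelerators.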

LitenAI runs as a service within new or existing Spark™ clusters. Once enabled, it uses jar files in Spark tasks to perform accelerated functions through LitenAI. Customer jobs keep their existing settings but run faster. LitenAI accelerates operations such as filters and joins that are common in SQL query plans. It can also build custom accelerated UDFs (user-defined functions) tailored to individual customer requirements. These UDFs can be applied within queries or used as standalone functions to address specific customer use cases.

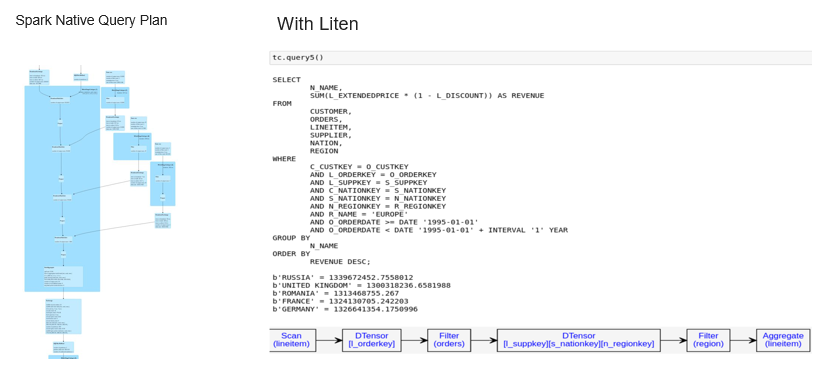

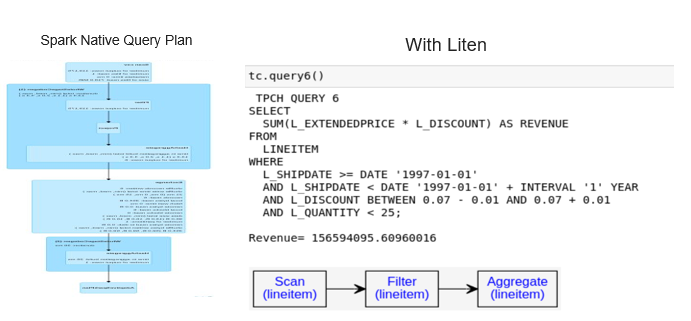

BENCHMARK TPCH QUERY

The Transaction Processing Performance Council (TPC) defines industry-standard benchmarks for data and query performance. TPC Benchmark™ H (TPC-H) is a decision support benchmark. Benchmark tests were run on Query 5, which involves complex multi-table joins and a long query plan, and on Query 6, which scans and aggregates the large lineitem table. The tests used a standalone Spark cluster alongside a separate Liten service. Liten improved query performance with its tensor-based engine and kept an in-memory cache of tensors so that they did not have to be recreated redundantly.
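
The in-memory tensor cache mentioned above can be sketched as a map from a table name and data version to an already built tensor, so repeated queries reuse it and only changed data triggers a rebuild. The class name, the versioning scheme, and the string returned by the build function are hypothetical stand-ins, not LitenAI's API.

```python
from typing import Callable, Dict, Hashable, Tuple


class TensorCache:
    """Keep built tensors in memory, keyed by (table, data version)."""

    def __init__(self, build: Callable[[str], object]):
        self._build = build
        self._entries: Dict[Tuple[str, Hashable], object] = {}
        self.builds = 0  # how many times a tensor was actually constructed

    def get(self, table: str, version: Hashable) -> object:
        key = (table, version)
        if key not in self._entries:
            # Drop stale versions of this table before building the new one.
            for old in [k for k in self._entries if k[0] == table]:
                del self._entries[old]
            self._entries[key] = self._build(table)
            self.builds += 1
        return self._entries[key]


# A string stands in for a real tensor built from the table's data.
cache = TensorCache(build=lambda table: f"tensor<{table}>")
cache.get("lineitem", version=1)
cache.get("lineitem", version=1)  # served from memory, no rebuild
cache.get("lineitem", version=2)  # data changed, so the tensor is rebuilt
print(cache.builds)
```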

TPCH Query 5

This query lists, for each nation in a region, the revenue from orders in which the customer and the supplier are both located in that nation.

LitenAI speeds up this query by eliminating the need for joins: its tensor representation replaces joins with simpler multi-dimensional lookups. This minimizes shuffle operations and significantly accelerates the query.
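
One way to see how a join can become a multi-dimensional lookup: when dimension keys are dense integers, each dimension table can be stored as an array indexed by key, and a foreign-key join turns into an array gather. The sketch below applies this to a Q5-style "same nation" condition using NumPy and made-up toy data; it assumes the customer key has already been carried over from the orders table and illustrates the general technique, not LitenAI's engine.

```python
import numpy as np

# Dimension tables stored as arrays indexed by dense integer keys (toy data).
supplier_nation = np.array([0, 1, 1, 2])  # supplier key -> nation key
customer_nation = np.array([1, 0, 2])     # customer key -> nation key
nation_names = np.array(["FRANCE", "GERMANY", "JAPAN"])

# Fact rows, one per line item; customer key assumed already resolved from orders.
l_suppkey = np.array([0, 1, 2, 3, 1, 0])
l_custkey = np.array([1, 0, 0, 2, 2, 1])
revenue = np.array([100.0, 50.0, 70.0, 30.0, 20.0, 10.0])

# Gathers replace the joins: look up each row's supplier and customer nation.
s_nat = supplier_nation[l_suppkey]
c_nat = customer_nation[l_custkey]
local = s_nat == c_nat  # customer and supplier in the same nation

# Group-by-nation as a weighted bincount, with no shuffle of rows between workers.
per_nation = np.bincount(s_nat[local], weights=revenue[local], minlength=len(nation_names))
for name, total in zip(nation_names, per_nation):
    print(name, total)
```

The lookup replaces hash-table building and probing with direct indexing, which is why such a layout can cut both the work and the data movement of a join-heavy plan.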

TPCH Query 6

This query estimates how much revenue would have increased if certain company-wide discounts had been eliminated for line items shipped in a given year.

With LitenAI, the query plan is simplified and the scans and aggregates are accelerated. On a standard Azure DS2 VM, Spark 3.2 took 16 seconds to execute the query, whereas Liten completed it in just 0.06 seconds, a speedup of more than 100x (16 / 0.06 ≈ 267).
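
For reference, the sketch below expresses Query 6 as a single vectorized scan and aggregate over columns: filter line items by ship date, discount, and quantity, then sum `l_extendedprice * l_discount`. NumPy stands in for a tensor engine, the four rows are made up, and the predicates use the commonly cited default parameters (year 1994, discount 0.06 ± 0.01, quantity below 24).

```python
import numpy as np

# Toy lineitem columns (made-up values, not TPC-H data).
l_shipdate = np.array(
    ["1994-03-15", "1994-07-01", "1995-02-10", "1994-11-30"], dtype="datetime64[D]"
)
l_discount = np.array([0.06, 0.05, 0.06, 0.07])
l_quantity = np.array([10, 30, 5, 12])
l_extendedprice = np.array([1000.0, 2000.0, 1500.0, 800.0])

start = np.datetime64("1994-01-01")
end = np.datetime64("1995-01-01")

# All Q6 predicates evaluated as one boolean mask over whole columns.
mask = (
    (l_shipdate >= start) & (l_shipdate < end)
    & (l_discount >= 0.05) & (l_discount <= 0.07)
    & (l_quantity < 24)
)
revenue = float(np.sum(l_extendedprice[mask] * l_discount[mask]))
print(revenue)
```

Since Q6 touches a single table, there is no join to remove; the gain in this formulation comes from scanning a few dense columns and reducing them in one pass.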