Our customers often spend significant time cleaning incoming raw data before it can be ingested into clean, structured tables. With LitenAI, these tasks can be performed simply by using natural language prompts. The agent reads the files, removes or modifies entries, and converts the data into the desired table structure or schema before writing it to a persistent table. This process reduces manual effort for engineers and empowers non-engineers to accomplish the same tasks effortlessly.

Previously, customers struggled to get precise answers from their AI chat tools — responses were too broad and failed to take context into account. In this solution, we ingest data into the LitenAI Smart Lake, and the LitenAI Agentic Flow completes the data processing based on customer requests.

In this blog, we demonstrate how this works through a chat interface, but it can also be used programmatically in notebooks via the litenai package.

LitenAI is available on demand. Please contact us to try it out.

The Smart Lake contains two tables: one holds the raw menu data and the other holds the cleaned-up data. Tables can be created automatically when a file is ingested, or created directly in the LitenAI Smart Lake. Scroll down to the Smart Lake section for details on the lake.

Below are the general steps showing how raw data can be cleaned and loaded:

Data table structures

We will start by reading the raw and JSON files into the Smart Lake. For this demonstration, I’ve created a new lake called menus, where all related tables and data will reside.

All incoming files are ingested into a table called menuraw. The fields and data types for this table can be automatically inferred from the content, so there’s no need to define the schema in advance. However, if the schema is already known, the table can also be created beforehand using a schema JSON structure.
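To make the idea of inferring a schema from content concrete, here is a minimal sketch in plain Python. It is not LitenAI's inference logic or its schema JSON format. The `item` field, the sample records, and the `infer_schema` helper are all hypothetical and exist only for illustration.

```python
import json

# Hypothetical raw records, one JSON object per line (not actual demo data).
RAW_LINES = [
    '{"chain": "Olive Garden", "item": "Lasagna", "price": 14.5}',
    '{"chain": "Mandarin House", "item": "Fried Rice", "price": null}',
    '{"chain": "Casa Mexicana", "item": "Tacos", "price": 9}',
]


def infer_schema(records):
    """Map each field to a type name based on the non-null values seen."""
    schema = {}
    for record in records:
        for field, value in record.items():
            if value is None:
                continue  # nulls carry no type information
            seen = type(value).__name__
            known = schema.get(field)
            if known is None or known == seen:
                schema[field] = seen
            elif {known, seen} == {"int", "float"}:
                schema[field] = "float"  # widen mixed numeric values
            else:
                schema[field] = "str"  # fall back to text on a conflict
    return schema


records = [json.loads(line) for line in RAW_LINES]
schema = infer_schema(records)
print(json.dumps(schema, indent=2))
```

The printed dictionary is the kind of field-to-type mapping that could instead be written by hand when the schema is known ahead of time.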

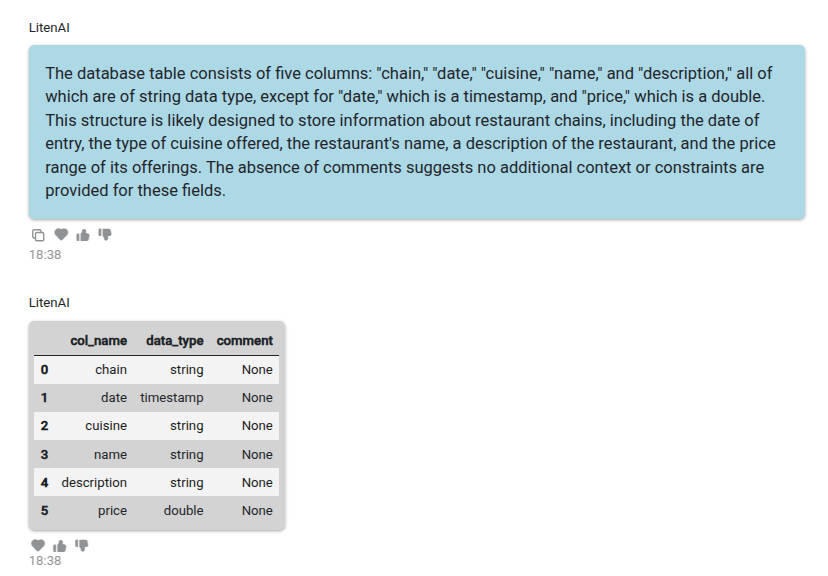

You can describe and generate these tables using a simple natural language prompt.

Describe menus.menuraw table

The chatbot shows the menuraw table structure:

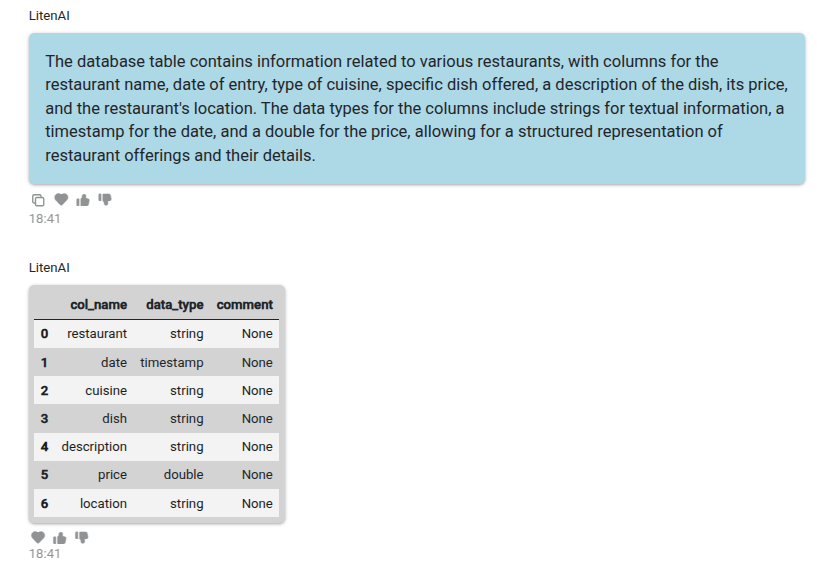

All cleaned-up data is added to the menu table. You can view the structure of the menu table with a prompt like this:

Describe menus.menu table

The response describes the menu table, including its schema and details.

You’ll notice that the table structures differ, and some rows may contain incorrect information that needs to be cleaned.

Demo files are included with the Smart Lake. See the Smart Lake section below for instructions if you want to upload menu files.

Cleaning and writing the new table

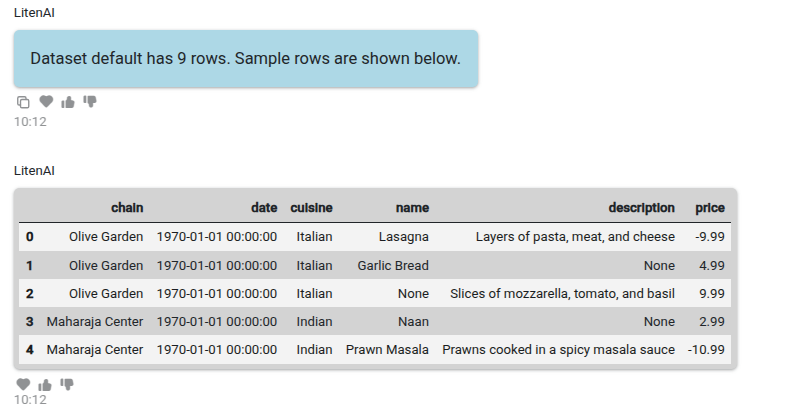

You can review the menuraw table if needed. Use prompts like the ones below to explore its contents.

Count the number of rows in menus.menuraw table.

The output shows the total row count:

You can also search for rows having negative or null values.

Generate sql to select rows from menus.menuraw table any of its column having null entries or if price is negative. Execute the sql.

The agent generates the SQL and executes it.
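For readers who want to see what such a query might look like, the sketch below runs one against an in-memory SQLite table. It is an illustration only, not the SQL that LitenAI generates. The table is named `menuraw` without the `menus.` prefix because SQLite has no lake namespace, and the `item` column and sample rows are hypothetical.

```python
import sqlite3

# In-memory stand-in for the menus.menuraw table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE menuraw (chain TEXT, item TEXT, price REAL)")
conn.executemany(
    "INSERT INTO menuraw VALUES (?, ?, ?)",
    [
        ("Olive Garden", "Lasagna", 14.5),   # valid row
        ("Mandarin House", None, 11.0),      # null item
        ("Casa Mexicana", "Tacos", -3.0),    # negative price
        (None, "Naan", 4.0),                 # null chain
        ("Maharaja Center", "Dal", None),    # null price
    ],
)

# Select rows where any column is NULL or the price is negative.
query = """
SELECT chain, item, price
FROM menuraw
WHERE chain IS NULL
   OR item IS NULL
   OR price IS NULL
   OR price < 0
"""
bad_rows = conn.execute(query).fetchall()
for row in bad_rows:
    print(row)
```

Each column needs its own `IS NULL` check because SQL has no single predicate for "any column is null".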

You can use a prompt to generate code for the specific cleanup you need. Be as precise as possible in your request — this helps generate accurate code. The code can also be executed directly from the same prompt.

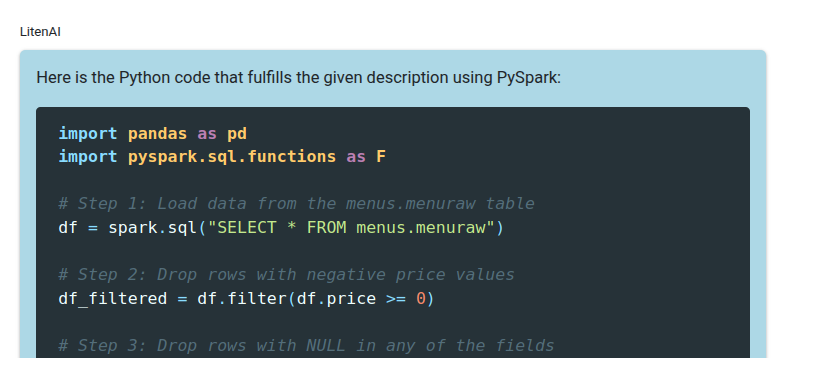

In this example, I’ll first generate the cleanup code and then run it.

Create python code for table menus.menuraw dropping all rows having negative price value. Create a dataset dropping all the rows with NULL in any of the fields. In this dataset, rename chain field to restaurant. Also, add another field called location. For ‘Casa Mexicana’ and ‘Maharaja Center’ restaurant, location should be San Jose. For ‘Mandarin House’ and ‘Olive Garden’ the location should be San Francisco. Save the final output dataset into menus.menu table. Use dfsout name for the final output dataset.

The generated code includes the available data and execution context. It is also validated to ensure accuracy. An example of the generated code is shown below.
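As a rough idea of what cleanup code for this prompt could look like, here is a pandas sketch. It is not the code LitenAI produced. It assumes pandas is available, uses a small hypothetical DataFrame in place of menus.menuraw, and invents an `item` column for illustration. Writing the result to menus.menu is left as a comment because the lake's write API is not shown in this post.

```python
import pandas as pd

# Hypothetical stand-in for menus.menuraw; real code would read the lake table.
menuraw = pd.DataFrame(
    {
        "chain": ["Casa Mexicana", "Maharaja Center", "Mandarin House",
                  "Olive Garden", None, "Olive Garden"],
        "item": ["Tacos", "Dal", "Fried Rice", "Lasagna", "Naan", None],
        "price": [9.0, -2.0, 11.0, 14.5, 4.0, 8.0],
    }
)

# Restaurant-to-location mapping taken from the prompt.
LOCATIONS = {
    "Casa Mexicana": "San Jose",
    "Maharaja Center": "San Jose",
    "Mandarin House": "San Francisco",
    "Olive Garden": "San Francisco",
}


def clean_menu(df):
    """Apply the cleanup steps from the prompt and return the new dataset."""
    return (
        df[~(df["price"] < 0)]                     # drop negative prices
        .dropna()                                  # drop rows with any null field
        .rename(columns={"chain": "restaurant"})   # rename chain to restaurant
        .assign(location=lambda d: d["restaurant"].map(LOCATIONS))
        .reset_index(drop=True)
    )


dfsout = clean_menu(menuraw)
print(dfsout)
# A real run would now save dfsout into the menus.menu table.
```

Of the six sample rows, three survive: one is dropped for a negative price and two for null fields.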

This code can now be executed.

Execute the generated python code.

The code runs and displays the following output.

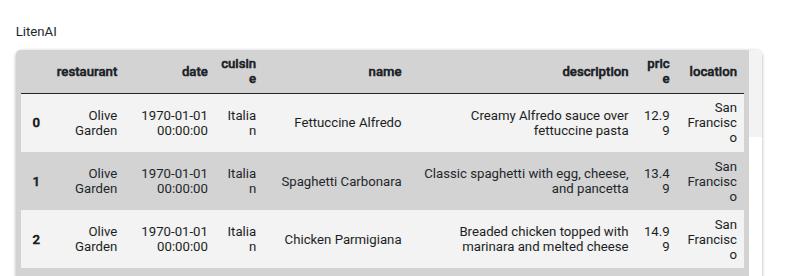

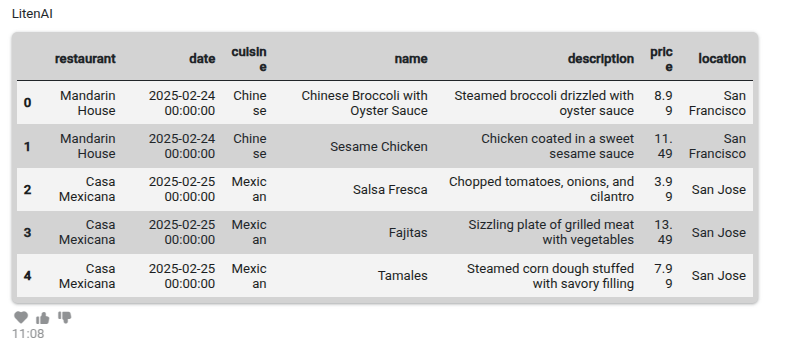

You can review the output data or visualize it to ensure everything looks correct. Below are a few example prompts and their outputs.

Generate SQL to select 5 rows of menus.menu table. Execute the code.

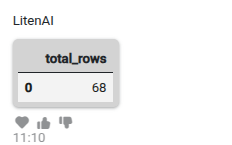

Count the number of rows in menus.menu table

In the output, you can see that 12 rows were dropped due to invalid data. The row count decreased from 80 in menuraw to 68 in menu. You can also verify that a valid location is now included in the table.

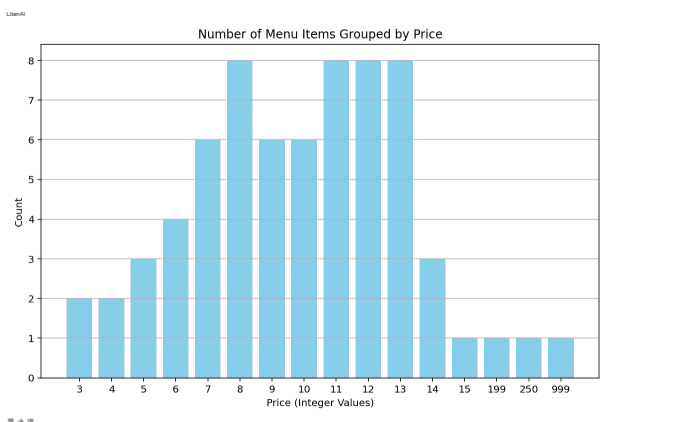

Additionally, you can generate plots as needed to further inspect the data.

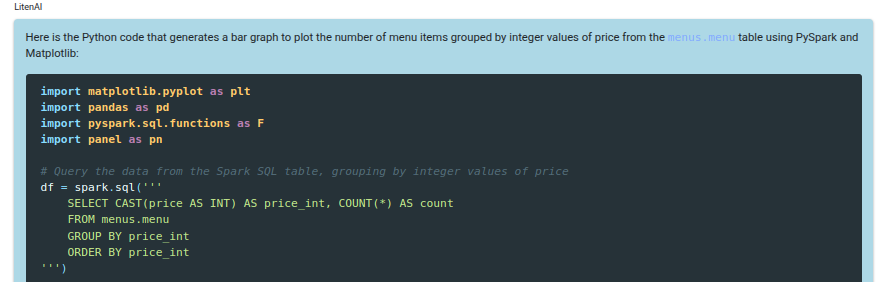

Generate python to plot the number of menu items grouped by integer values of price from menus.menu table. Use a bar graph.

Execute the code.
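The grouping behind such a plot can be sketched as follows. This is an illustration under stated assumptions, not the code LitenAI generates: the price list is hypothetical, both helper functions are made up for this example, and the plotting function assumes matplotlib is installed.

```python
from collections import Counter

# Hypothetical prices standing in for the price column of menus.menu.
prices = [9.0, 11.0, 11.75, 14.5, 9.99, 4.0]


def count_by_integer_price(values):
    """Count items per integer price; int() truncates, e.g. 9.99 -> 9."""
    counts = Counter(int(value) for value in values)
    return dict(sorted(counts.items()))


def plot_counts(counts):
    """Draw the counts as a bar graph and return the figure."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for plotting

    fig, ax = plt.subplots()
    ax.bar([str(price) for price in counts], list(counts.values()))
    ax.set_xlabel("Price (integer part)")
    ax.set_ylabel("Number of menu items")
    ax.set_title("Menu items by price")
    return fig


counts = count_by_integer_price(prices)
print(counts)
```

Calling `plot_counts(counts)` builds the bar graph. Truncating with `int()` matches rounding down here because prices in the cleaned table are never negative.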

Smart Lake

In the LitenAI Smart Lake, customers ingest their data and connect to the databases they need. All data is securely stored within the customer’s own storage environment. Data can be ingested in multiple ways: programmatically from stored files, or through streaming for continuous ingestion. Data can also be uploaded and managed using Lake Agents, either via chat or through the Lake GUI.

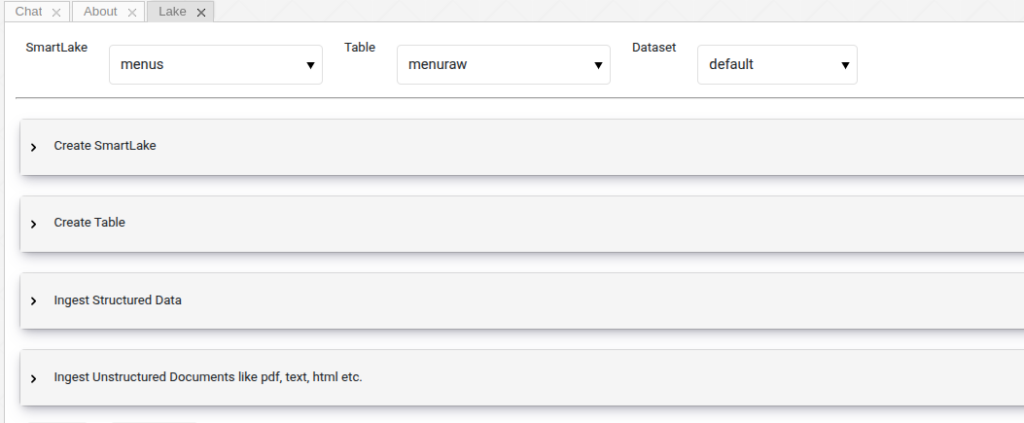

If you are following along with these prompts, make sure to select the menus data lake. Go to the Lake tab and ensure that the menus lake is selected. See the screenshot below for reference.

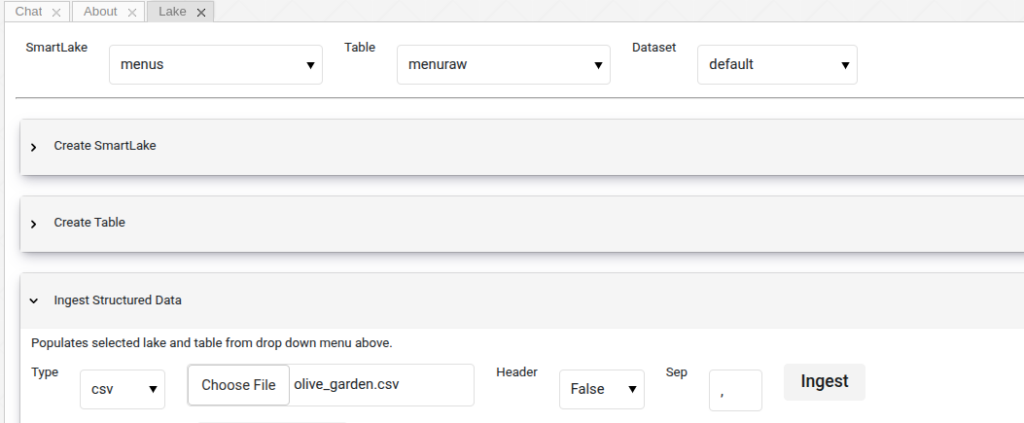

Files can be loaded into the menuraw table. The demo files come preloaded with the LitenAI demo. To load files yourself, select a file from the menu and click the Ingest button as shown below.

In this example, we demonstrated how data can be cleaned and loaded into another table using simple prompts. With LitenAI, data engineering tasks that once required manual effort from engineers can now be handled automatically by intelligent agents. These agents can understand instructions, generate the necessary code, and execute the operations seamlessly. This not only saves time but also makes complex data tasks accessible to non-engineers.

Please contact us to get started. We value your feedback and look forward to helping you resolve your challenges.