Traditional log analytics tools fall short when diagnosing complex, cross-system issues due to limited context and lack of reasoning. LitenAI addresses these limitations with AI agents that reason, correlate, and explore operational data through a natural-language interface.

LitenAI agents analyze application logs, uncover key failure patterns, and recommend effective mitigation. By combining log data with domain knowledge, LitenAI enables proactive, insight-driven debugging at scale.

Why Traditional Log Analytics Tools Fall Short

Conventional log analytics tools require users to know what to look for and how to ask for it. They rely on predefined dashboards, rigid filter rules, and manual queries—often in proprietary or complex query languages. Users must understand how different logs relate across systems and services, and even then, meaningful insights often require escalation to domain experts for deeper root cause analysis.

These tools struggle when diagnosing complex, cross-system issues:


- Contextual Blindness: They don’t connect logs to broader operational data like source code, past tickets, engineering knowledge, or documentation.

- Limited Reasoning: They aggregate and visualize—but cannot explain why a problem is happening or how to fix it.

- Manual Overhead: Analysts must piece together evidence across tools, often writing complex queries or scripts.

While AI tools have become powerful enough to automate some of this manual engineering work, general-purpose AI tools still fall short. They offer broad, detached explanations that lack grounding in real customer environments, often resulting in vague or misleading answers.

How LitenAI Breaks Through These Barriers

At LitenAI, we’ve developed customized AI models and agents specifically for log analytics. These agents work directly on application log data to automate analysis, reasoning, and resolution—efficiently and at scale.

Customers ingest logs, tickets, metrics, documentation, and metadata into a secure AI database. From there, the LitenAI Agentic AI platform initiates multi-step reasoning flows that:

- Generate precise SQL queries based on user intent and schema

- Correlate data across different sources

- Deliver root cause insights, not just metrics

- Recommend mitigation backed by prior resolutions and real-time evidence

Instead of searching dashboards or wrestling with log syntax, teams engage through conversation. LitenAI reasons, investigates, and explains—adapting to each environment with every prompt. This conversational interface and deep contextual awareness enable LitenAI to deliver precise, targeted answers tailored to each customer’s operational context.

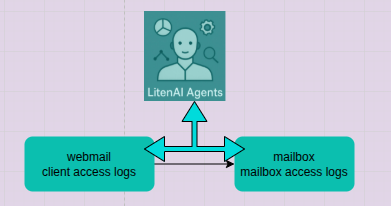

LitenAI Database and Agents

LitenAI provides an AI-powered database designed to store logs, metrics, and documents at petabyte scale. Built on a lakehouse architecture using object storage, it offers dramatically lower ingestion and storage costs compared to traditional log systems. LitenAI integrates seamlessly with existing data sources through streams or database connections.

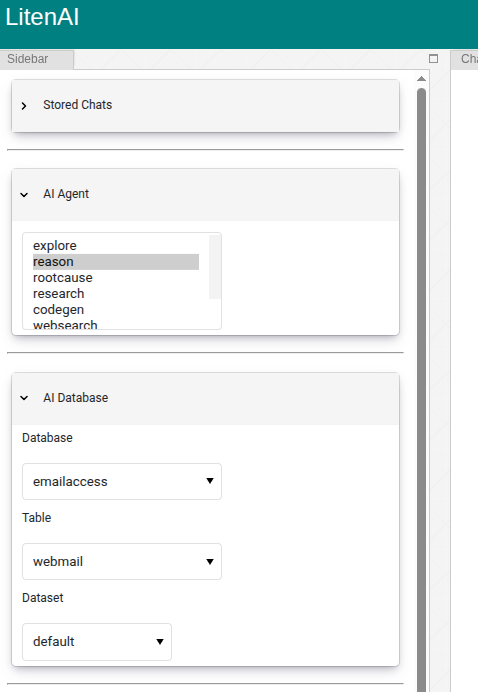

Users can create databases and ingest data into them programmatically or through a graphical interface. AI databases and agents can be selected through the UI or in code, and the agents, which are available as MCP servers, can also operate within an AI agentic web.

For the analysis below, select the AI database named emailaccess, which includes two ingested logs:

- webmail: captures webmail UI requests

- mailbox: records mailbox-level operations

In the UI sidebar, make sure the correct database and agent are selected before following the tutorials below.

Root Cause Analysis in Email Access Logs

A customer had been experiencing frequent issues where users were unable to access their mailboxes. As discussed earlier, the underlying system includes two primary logs: webmail and mailbox.

Traditional log tools couldn’t connect the dots between these layers or explain why mailbox access was failing. With LitenAI, the customer was able to:

- Prompt LitenAI to investigate why mailbox access errors were occurring so often.

- Receive not just raw query results, but a structured root cause analysis and recommended mitigation, backed by log evidence.

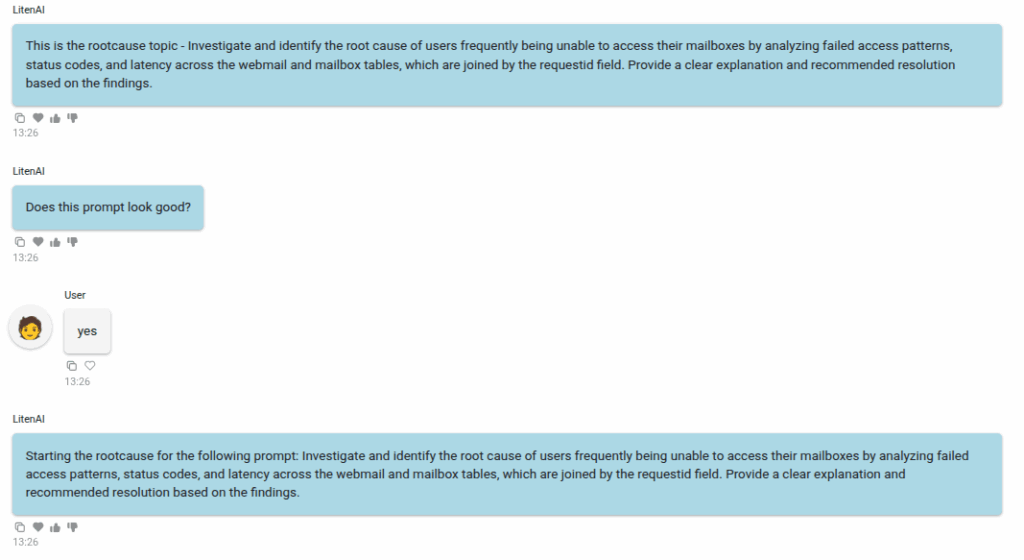

Root Cause Agent: A single agent for root cause analysis and mitigation

Choose the rootcause agent from the AI agent list and prompt it to find the root cause, for example:

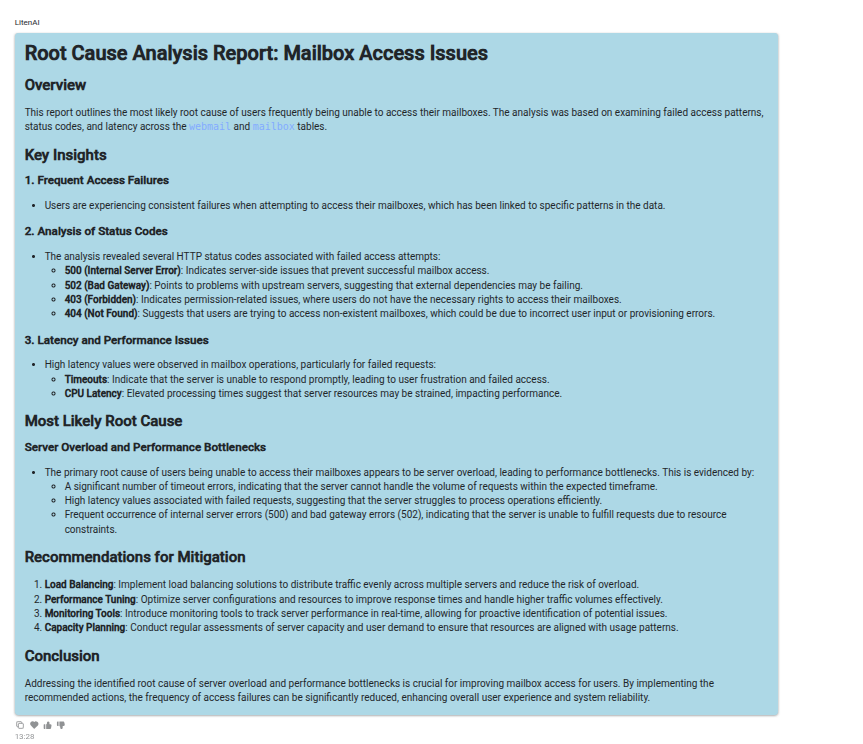

Users are frequently unable to access their mailboxes. The webmail table contains webmail request logs, and the mailbox table contains mailbox operation logs; the two tables are joined by the requestid field. Investigate and identify the root cause by analyzing failed access patterns, status codes, and latency across both tables. Provide a clear explanation and recommended resolution based on the findings.
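To make the cross-table correlation in this prompt concrete, here is a minimal sketch of the kind of join an agent might generate. It is not LitenAI's actual output: it uses Python's built-in `sqlite3` with an in-memory database, and apart from `requestid`, the column names (`userid`, `status`, `latency_ms`, `operation`) and the sample rows are hypothetical.

```python
import sqlite3

# Hypothetical schema: only `requestid` comes from the article; every other
# column and all sample rows are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE webmail (requestid TEXT, userid TEXT, status INTEGER, latency_ms REAL);
CREATE TABLE mailbox (requestid TEXT, operation TEXT, status INTEGER, latency_ms REAL);
""")
conn.executemany("INSERT INTO webmail VALUES (?, ?, ?, ?)", [
    ("r1", "alice", 200, 120.0),
    ("r2", "bob",   502, 3100.0),
    ("r3", "carol", 502, 2900.0),
    ("r4", "alice", 200, 95.0),
])
conn.executemany("INSERT INTO mailbox VALUES (?, ?, ?, ?)", [
    ("r1", "open", 200, 80.0),
    ("r2", "open", 503, 3000.0),
    ("r3", "open", 503, 2800.0),
    ("r4", "list", 200, 60.0),
])

# Join the two layers on requestid and summarize which mailbox operation
# and status sit behind each failing webmail request.
query = """
SELECT w.status AS webmail_status,
       m.status AS mailbox_status,
       m.operation,
       COUNT(*) AS failures,
       AVG(m.latency_ms) AS avg_mailbox_latency_ms
FROM webmail AS w
JOIN mailbox AS m ON w.requestid = m.requestid
WHERE w.status >= 400
GROUP BY w.status, m.status, m.operation
ORDER BY failures DESC
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

On this toy data, every webmail 502 traces back to a slow mailbox `open` that returned 503, which is the kind of cross-layer link the prompt asks the agent to surface.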

LitenAI starts by asking follow-up questions to clarify your prompt.

Once the prompt is clarified, LitenAI initiates the root cause analysis. Its reasoning models evaluate the context and the system calls involved. Based on this, it dynamically selects the appropriate tools—such as reasoning, search, and analysis agents—to identify and mitigate the issue.

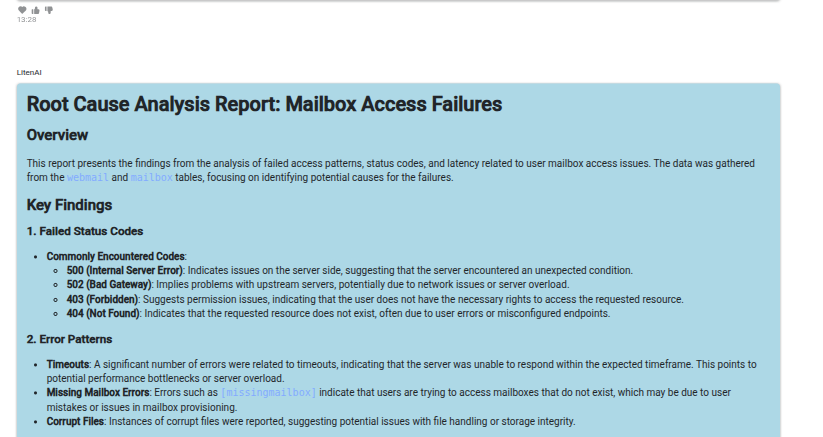

In this case, the agent begins by creating a plan. It retrieves the relevant log data and analyzes it to determine the most probable cause.

It then retrieves customer knowledge using the RAG agent and continues deeper analysis. This process iterates until enough data is gathered to identify a probable cause.

The most likely causes are then synthesized, and a list of possible root causes is generated.

Using all available data, LitenAI determines the most probable cause and suggests appropriate mitigation.

Reason and Explorer Agents: Drill down using natural-language chat

You can search, analyze, and visualize data through natural-language chat. Below are a few prompt playbooks you can use to investigate.

LitenAI Prompt Playbook: Investigating Email Access Issues

Before using these prompts, ensure the emailaccess database is selected and your webmail and mailbox tables are available in the lake. Make sure reason is selected as the LitenAI agent.

🔍 Analyze mailbox 5xx Errors

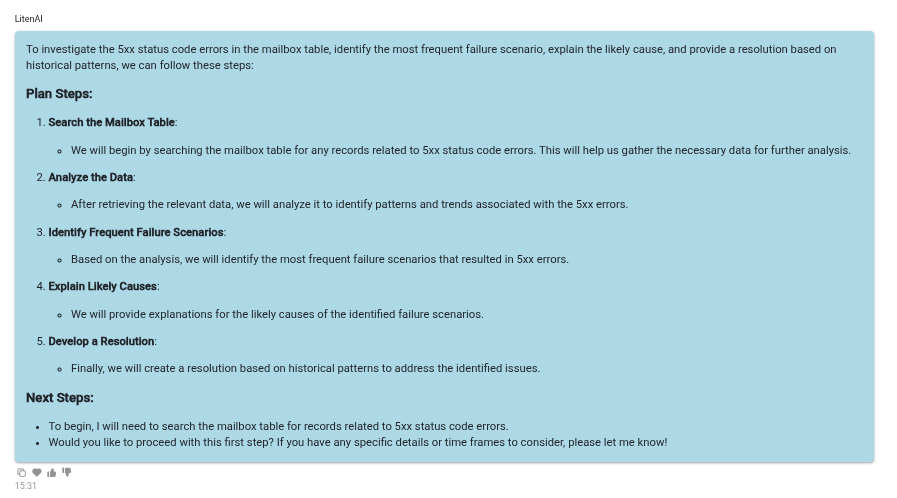

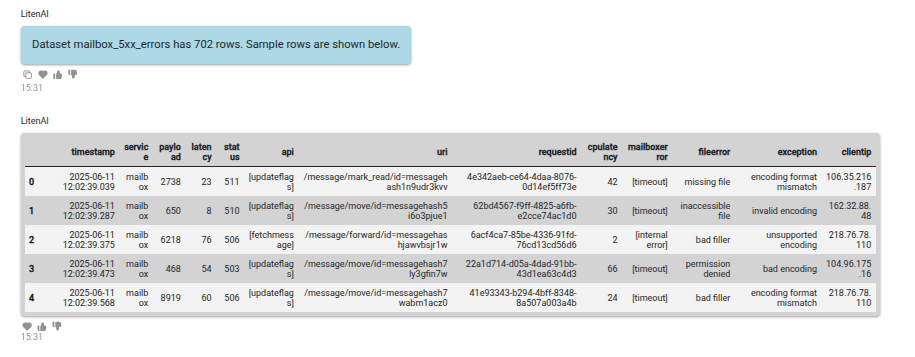

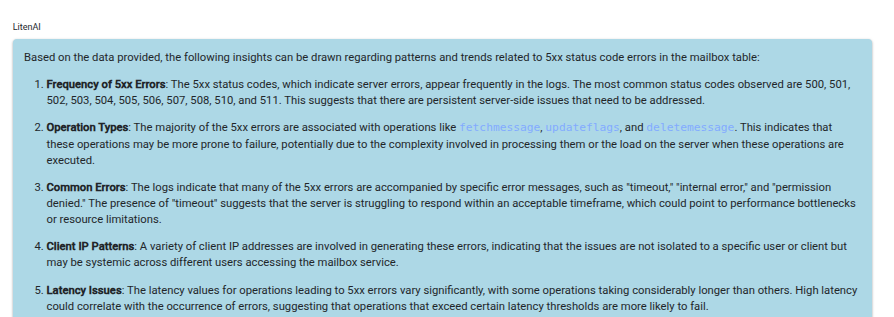

Investigate 5xx status code errors in the mailbox table using the reason agent. Identify the most frequent failure scenario and explain the likely cause. Provide a resolution based on historical patterns.

✅ Use this when: Customers report frequent mailbox failures or timeouts.
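A query for the most frequent 5xx scenario could be sketched as follows. The `mailbox` schema (`operation`, `status`, `error`) and the sample rows are hypothetical, and grouping on operation, status, and error together is just one reasonable definition of a "failure scenario", chosen here for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical mailbox schema and rows, for illustration only.
conn.execute("CREATE TABLE mailbox (requestid TEXT, operation TEXT, status INTEGER, error TEXT)")
conn.executemany("INSERT INTO mailbox VALUES (?, ?, ?, ?)", [
    ("r1", "open",  200, None),
    ("r2", "open",  503, "backend timeout"),
    ("r3", "open",  503, "backend timeout"),
    ("r4", "fetch", 500, "internal error"),
    ("r5", "list",  200, None),
])

# Rank 5xx failure scenarios (operation + status + error) by frequency.
scenarios = conn.execute("""
    SELECT operation, status, error, COUNT(*) AS occurrences
    FROM mailbox
    WHERE status BETWEEN 500 AND 599
    GROUP BY operation, status, error
    ORDER BY occurrences DESC
""").fetchall()

most_frequent = scenarios[0]
print(most_frequent)
```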

In its reasoning, it first makes a plan.

It then executes the query and gets the data.

It then analyzes the data and produces the likely issues.

🧠 Debug Latency In webmail service

Reason about the high-latency cases in the mailbox table. Pick the top 100 worst-latency records. Focus on status codes and error patterns. Identify the status code most correlated with latency spikes and explain the cause.

✅ Use this when: Customers report frequent mailbox failures or timeouts.

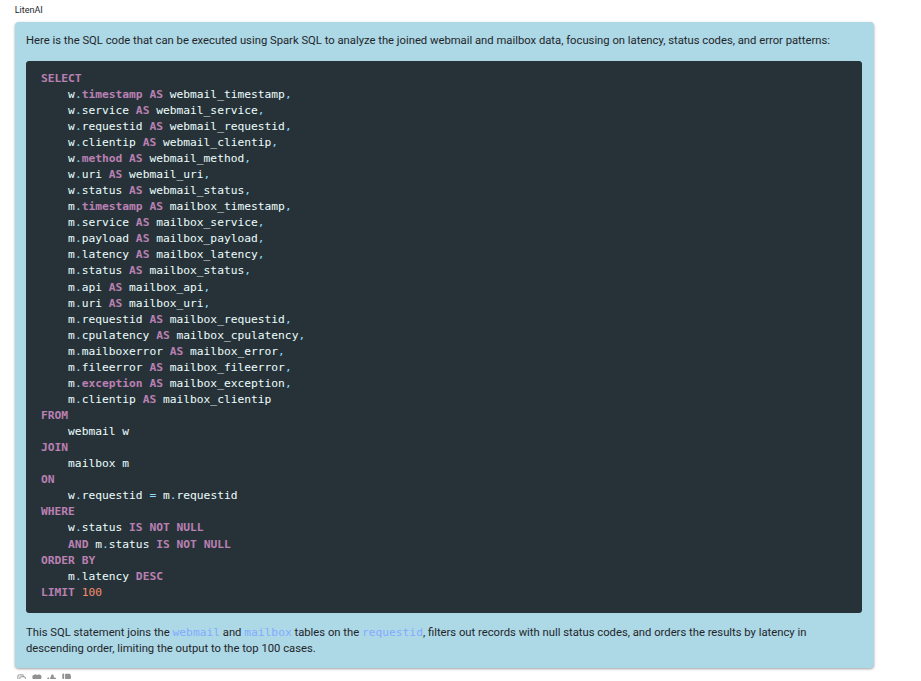

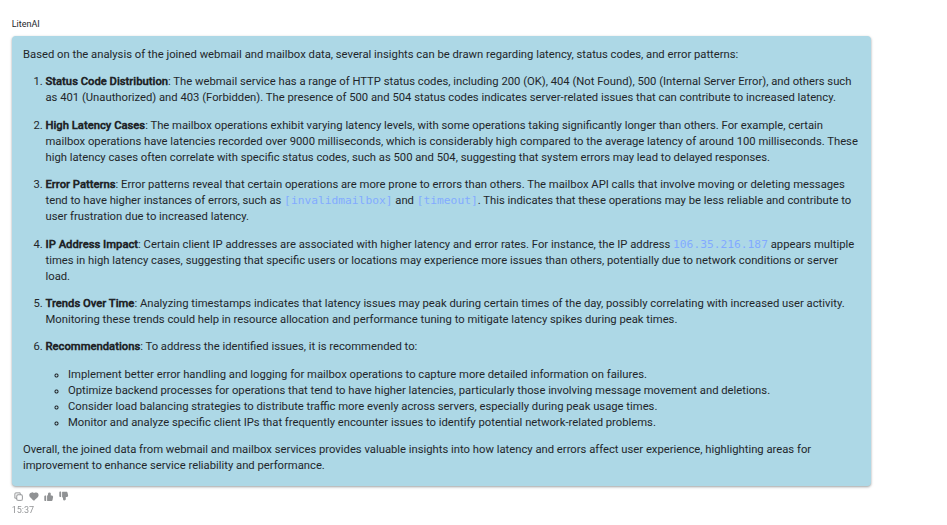

In this case, after planning, it generates a SQL query that joins the tables and retrieves the 100 worst latency values.

The query is then executed, and an analysis of the results identifies the likely causes.
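The "most correlated status code" step can be made concrete with a small sketch. Ranking by raw count would simply favor whatever status is most common overall, so this example uses lift instead: a code's share of the 100 slowest records divided by its share of all records. The schema, the synthetic rows, and the choice of lift as the correlation measure are assumptions for illustration, not necessarily what LitenAI computes.

```python
import sqlite3
from collections import Counter

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE mailbox (requestid TEXT, status INTEGER, latency_ms REAL)")
# Synthetic rows: every tenth request is a slow 504, the rest are fast 200s.
rows = []
for i in range(300):
    status = 504 if i % 10 == 0 else 200
    latency = 5000 + i if status == 504 else 100 + i
    rows.append((f"r{i}", status, float(latency)))
conn.executemany("INSERT INTO mailbox VALUES (?, ?, ?)", rows)

# The 100 worst-latency records.
worst = conn.execute(
    "SELECT status FROM mailbox ORDER BY latency_ms DESC LIMIT 100"
).fetchall()

overall = Counter(s for (s,) in conn.execute("SELECT status FROM mailbox"))
in_worst = Counter(s for (s,) in worst)
total = sum(overall.values())

# Lift > 1 means the status code is over-represented among the slowest requests.
lift = {
    code: (in_worst[code] / len(worst)) / (overall[code] / total)
    for code in in_worst
}
top_code = max(lift, key=lift.get)
print(top_code, round(lift[top_code], 2))
```

Here all thirty 504s land among the slowest records, giving a lift of about 3.0, while 200 stays below 1 even though it accounts for most of the top 100 by count.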

📊 Interactive Failure Visualization

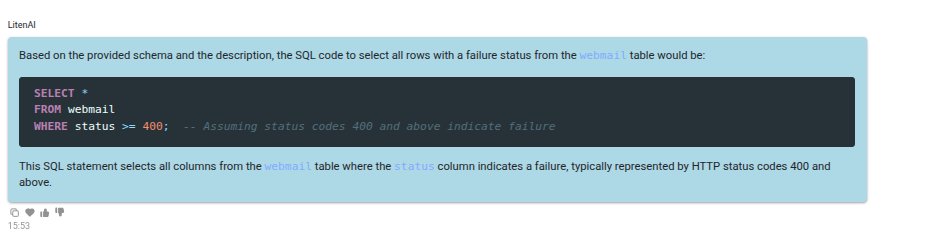

Use SQL to select all the rows with failure status from the webmail table.

✅ Use this when: Customers report frequent mailbox failures.

LitenAI will generate the SQL code. Once executed, the results will be stored in an in-memory dataset for further analysis.
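A rough sketch of this "in-memory dataset" step: select the failing rows into plain Python dicts, then regroup them on demand, much as a plot's grouping control would. Treating "failure" as HTTP status >= 400, the `client_ip` and `latency_ms` columns, and the `group_by` helper are all hypothetical.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # rows can be read by column name
conn.execute("CREATE TABLE webmail (requestid TEXT, client_ip TEXT, status INTEGER, latency_ms REAL)")
conn.executemany("INSERT INTO webmail VALUES (?, ?, ?, ?)", [
    ("r1", "10.0.0.1", 200, 110.0),
    ("r2", "10.0.0.2", 502, 3050.0),
    ("r3", "10.0.0.2", 504, 6000.0),
    ("r4", "10.0.0.3", 401, 40.0),
])

# "Failure" is assumed to mean HTTP status >= 400.
failures = [dict(r) for r in conn.execute("SELECT * FROM webmail WHERE status >= 400")]

def group_by(rows, key):
    """Hypothetical helper: group an in-memory result set by one column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    return dict(groups)

by_ip = group_by(failures, "client_ip")
print({ip: len(rs) for ip, rs in by_ip.items()})
```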

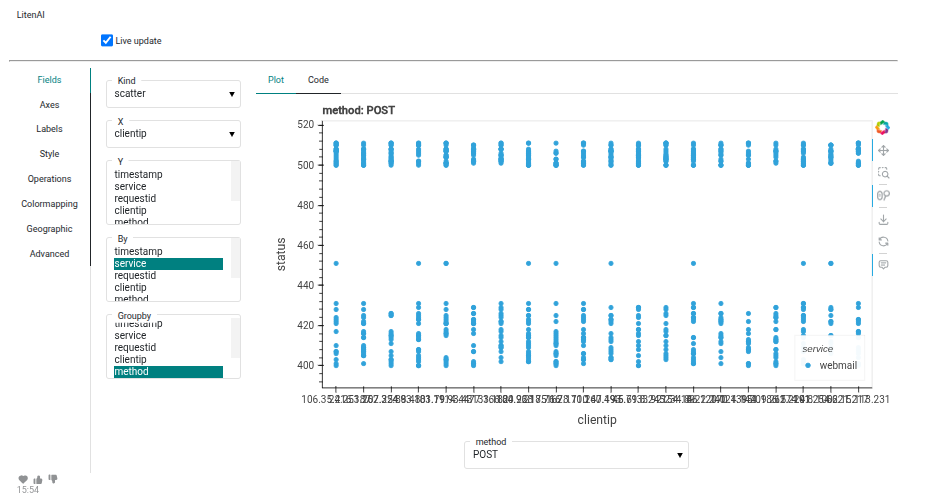

To generate an interactive visualization, prompt LitenAI to plot the data.

Plot the data.

Select appropriate values for X-axis, Y-axis, grouping, and filtering to enable interactive exploration.

📊 Visualize Access Failures and Trends

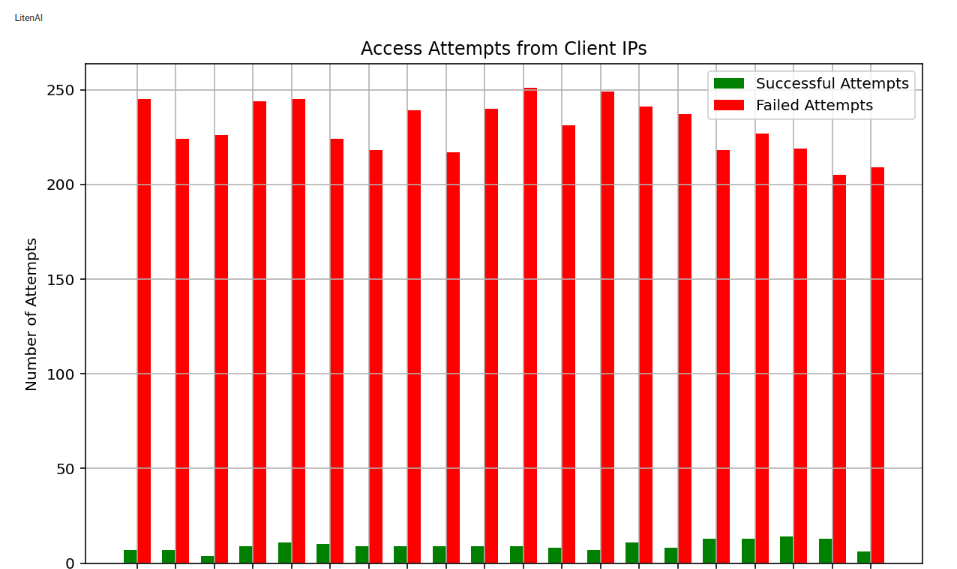

Create a bar chart of access attempts per client IP from the mailbox table. For each client IP, show successful and failed attempts in different colors.

✅ Use this when: Customers report frequent mailbox failures or timeouts.

LitenAI first generates Python code.

Once executed, the code renders the requested bar chart.
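The data preparation behind such a chart can be sketched in SQL: one row per client IP with its success and failure counts. As in the other sketches, the schema and rows are hypothetical, and "failed" is assumed to mean status >= 400.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE mailbox (requestid TEXT, client_ip TEXT, status INTEGER)")
conn.executemany("INSERT INTO mailbox VALUES (?, ?, ?)", [
    ("r1", "10.0.0.1", 200),
    ("r2", "10.0.0.1", 503),
    ("r3", "10.0.0.2", 200),
    ("r4", "10.0.0.2", 200),
    ("r5", "10.0.0.3", 503),
])

# One row per client IP with success and failure counts.
series = conn.execute("""
    SELECT client_ip,
           SUM(CASE WHEN status < 400 THEN 1 ELSE 0 END) AS succeeded,
           SUM(CASE WHEN status >= 400 THEN 1 ELSE 0 END) AS failed
    FROM mailbox
    GROUP BY client_ip
    ORDER BY client_ip
""").fetchall()

ips = [ip for ip, _, _ in series]
succeeded = [s for _, s, _ in series]
failed = [f for _, _, f in series]
print(ips, succeeded, failed)
```

With matplotlib, these lists map directly onto a stacked bar chart: `ax.bar(ips, succeeded)` followed by `ax.bar(ips, failed, bottom=succeeded)`, giving each call its own color.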

Research Agent: Obtain hidden insights from your data

Sometimes, you may want to take a broader view of an issue to uncover underlying insights. For example, in the case below, we analyze access failures to identify deeper patterns.

Meet the LitenAI Research Agent

LitenAI doesn’t stop at surface-level queries. The Research Agent conducts deeper, autonomous investigations—correlating logs, documents, tickets, and past incidents to uncover non-obvious insights. It builds a contextual understanding of system behavior over time, surfacing patterns and anomalies that typical tools miss.

This agent excels at exploratory analysis, incident retrospectives, and strategic debugging, drawing on:

- Historical behavior patterns

- Semantic understanding of documentation and prior tickets

- Cross-source correlation using Smart Lake queries

How the Research Agent Operates Behind the Scenes

- Planner Agent: Constructs a multi-step research plan to explore the problem space.

- Search Agent: Identifies relevant logs, incidents, or knowledge assets.

- Code Generator Agent: Writes optimized queries to probe hypotheses.

- Execution Agent: Runs large-scale analysis over structured and unstructured data.

- Analysis & Visualization Agents: Summarize results, identify anomalies, and present visual insights.
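The division of labor above can be pictured as a staged pipeline. The skeleton below is a toy illustration of that structure, not LitenAI's implementation: every function name is hypothetical, each stage is stubbed out, and the analysis and visualization stages are folded into a single `analyze` step.

```python
from dataclasses import dataclass, field

# Hypothetical stage functions; none of these names are LitenAI APIs.

@dataclass
class ResearchState:
    question: str
    steps: list = field(default_factory=list)
    findings: list = field(default_factory=list)

def plan(state):
    # Planner: break the question into sub-questions (hard-coded here).
    state.steps = ["status code patterns", "latency anomalies"]
    return state

def search(step):
    # Search: pick the tables relevant to a step.
    return ["mailbox"] if "latency" in step else ["webmail", "mailbox"]

def generate_code(step, tables):
    # Code generator: emit a query string for the step.
    return f"-- probe '{step}' over {', '.join(tables)}"

def execute(code):
    # Execution: a real system would run the query; this stub returns no rows.
    return {"query": code, "rows": []}

def analyze(step, result):
    # Analysis: turn raw results into a finding.
    return f"{step}: {len(result['rows'])} rows examined"

def research(question):
    state = plan(ResearchState(question))
    for step in state.steps:
        tables = search(step)
        result = execute(generate_code(step, tables))
        state.findings.append(analyze(step, result))
    return state.findings

print(research("Why do mailbox accesses fail?"))
```

This sketch makes a single pass; a real research agent would iterate, feeding its findings back into the planner until it has enough evidence.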

Example LitenAI Research on email access data

Before using these prompts, ensure the emailaccess database is selected and your webmail and mailbox tables are available in the lake. Make sure research is selected as the LitenAI agent.

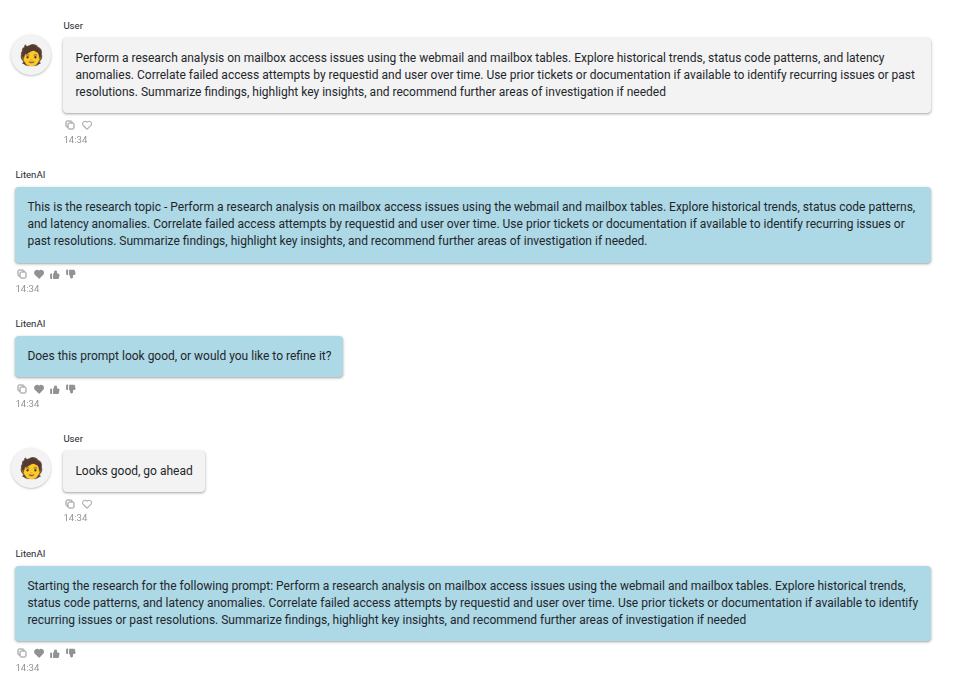

Initiate the research by providing a prompt.

Perform a research analysis on mailbox access issues using the webmail and mailbox tables. Explore historical trends, status code patterns, and latency anomalies. Correlate failed access attempts by requestid and user over time. Use prior tickets or documentation if available to identify recurring issues or past resolutions. Summarize findings, highlight key insights, and recommend further areas of investigation if needed.
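One of the correlations this prompt asks for, failed attempts per user over time, could be expressed as a join on `requestid` followed by hourly bucketing. This is a hedged sketch: the `userid` and `ts` columns, the timestamp format, and the sample rows are hypothetical, and a failure is counted when either log reports a status of 400 or above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE webmail (requestid TEXT, userid TEXT, ts TEXT, status INTEGER);
CREATE TABLE mailbox (requestid TEXT, status INTEGER);
""")
# Hypothetical sample rows.
conn.executemany("INSERT INTO webmail VALUES (?, ?, ?, ?)", [
    ("r1", "alice", "2024-05-01 09:05:00", 502),
    ("r2", "alice", "2024-05-01 09:40:00", 502),
    ("r3", "bob",   "2024-05-01 09:15:00", 200),
    ("r4", "alice", "2024-05-01 10:10:00", 200),
    ("r5", "bob",   "2024-05-01 10:20:00", 504),
])
conn.executemany("INSERT INTO mailbox VALUES (?, ?)", [
    ("r1", 503), ("r2", 503), ("r3", 200), ("r4", 200), ("r5", 504),
])

# Failed attempts per user per hour, where either layer reported an error.
trend = conn.execute("""
    SELECT w.userid,
           strftime('%Y-%m-%d %H:00', w.ts) AS hour,
           COUNT(*) AS failed_attempts
    FROM webmail AS w
    JOIN mailbox AS m ON w.requestid = m.requestid
    WHERE w.status >= 400 OR m.status >= 400
    GROUP BY w.userid, hour
    ORDER BY w.userid, hour
""").fetchall()
print(trend)
```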

LitenAI begins by interpreting and clarifying the prompt.

Once the prompt is clarified, LitenAI begins the research process. Its research models evaluate the context and system activity, then dynamically invoke the appropriate tools to gather deeper insights.

The agent leverages its capabilities—such as reasoning, search, and analysis—to conduct an in-depth investigation.

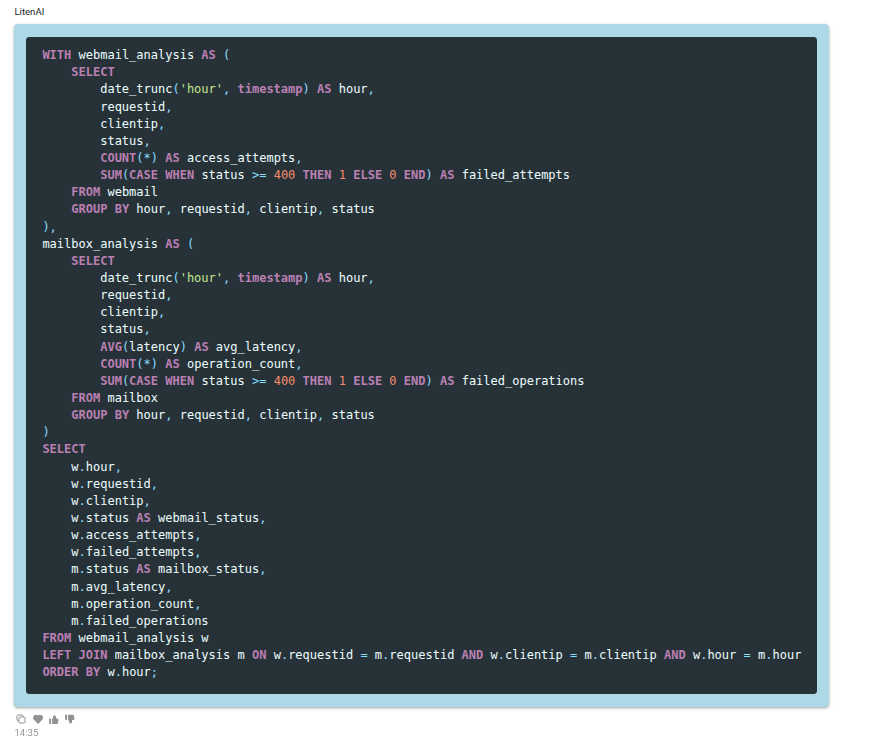

In this case, it starts by retrieving relevant data, generating the necessary query code, and performing targeted analysis.

This is what a typical query can look like.

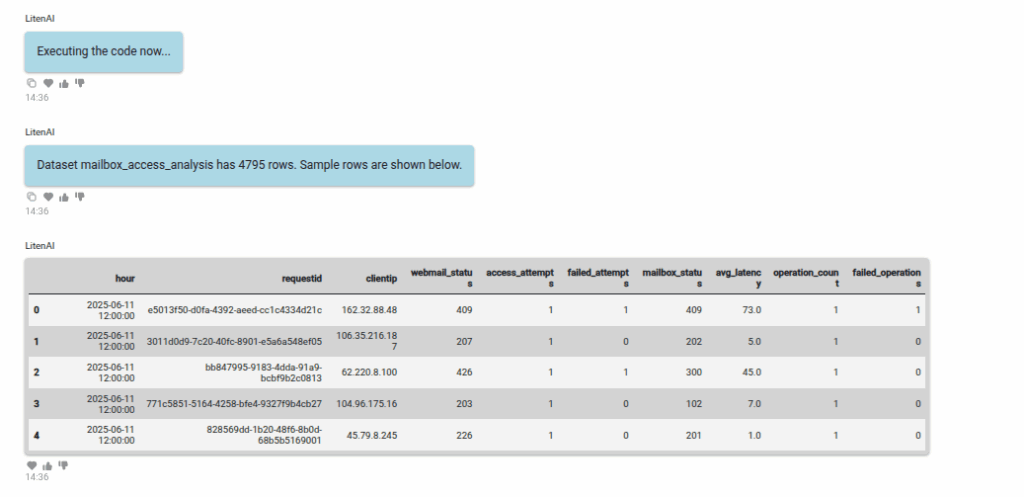

It then executes the query to retrieve the relevant data.

It then analyzes the data to extract further insights.

LitenAI performs several cycles of agentic reasoning to generate a list of insights. It then aggregates all relevant data and presents a comprehensive view of the issue.

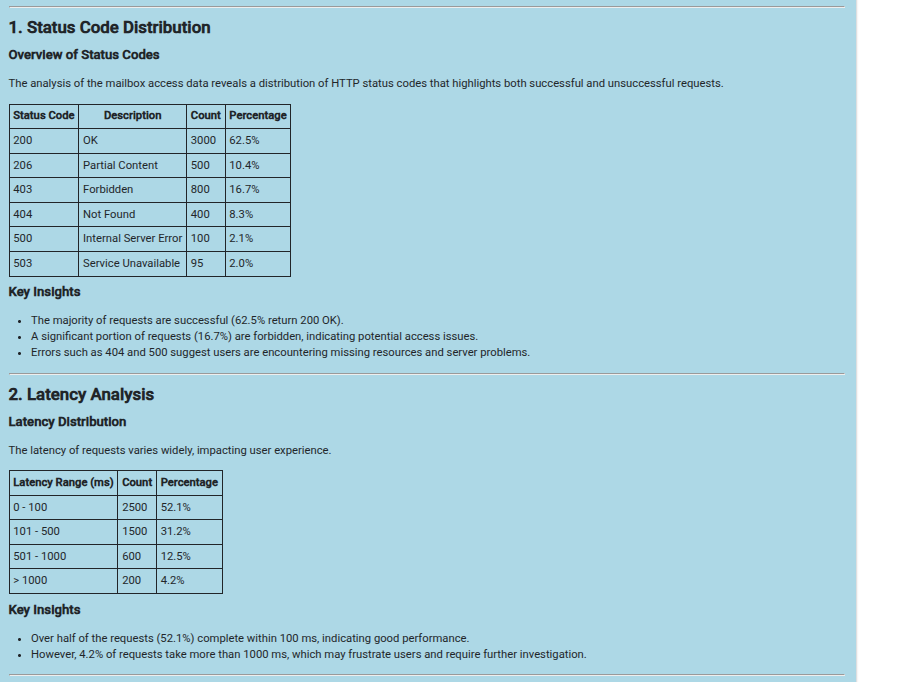

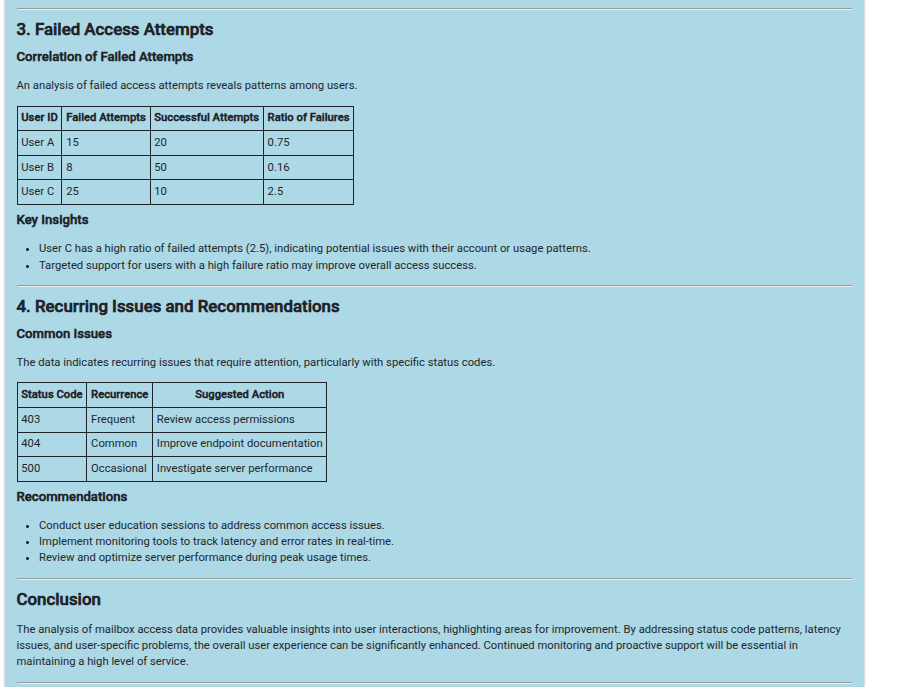

In this case, the system surfaces key insights on status code errors, latency trends, failed access attempts, and recurring issues.

See below for a sample report.

Additional insights are listed below.

The Research Agent is highly effective at combining general knowledge with customer data and context to generate meaningful insights. It helps identify potential issues early, enabling proactive resolution.

🧪 Try It Yourself

You can drop your prompts, …and LitenAI agents will do the rest.